Preparing Data For Analysis Is Crucial

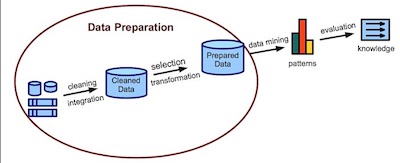

One of the most important aspects of statistics is the data that you have. The reality is that when you get a bunch of data, you need to take the time o prepare it. While this may seem a very simple and fast task, the truth is that it isn’t. Preparing data can be incredibly slow but is extremely important before you start with its analysis.

Looking to run statistic models?

Something that most people tend to assume is that preparing data can b very fast. So, if you are working with a client, it’s important that you explain to him that this is a slow process. Nevertheless, even if you say it will take you one hour, your client will still have unrealistic expectations that it will be a lot faster.

The time-consuming part is preparing the data. Weeks or months is a realistic time frame. Hours is not. Why? There are three parts to preparing data: cleaning it, creating necessary variables, and formatting all variables.

Preparing Data For Analysis Is Crucial

#1: Data Cleaning:

Data cleaning means finding and eliminating errors in the data. How you approach it depends on how large the data set is, but the kinds of things you’re looking for are:

- Impossible or otherwise incorrect values for specific variables

- Cases in the data who met exclusion criteria and shouldn’t be in the study

- Duplicate cases

- Missing data and outliers

- Skip-pattern or logic breakdowns

- Making sure that the same value of string variables is always written the same way (male ≠ Male in most statistical software).

You can’t avoid data cleaning and it always takes a while, but there are ways to make it more efficient. For example, one way to find impossible values for a variable is to print out data for cases outside a normal range.

This is where learning how to code in your statistical software of choice really helps. You’ll need to subset your data using IF statements to find those impossible values. But if your data set is anything but small, you can also save yourself a lot of time, code, and errors by incorporating efficiencies like loops and macros so that you can perform some of these checks on many variables at once.

Understanding the basics of principal component analysis.

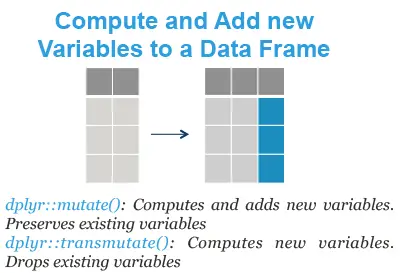

#2: Creating New Variables:

Once the data are free of errors, you need to set up the variables that will directly answer your research questions.

It’s a rare data set in which every variable you need is measured directly.

So you may need to do a lot of recoding and computing of variables.

Examples include:

- Creating change scores

- Creating indices from scales

- Combining too-small-to-use categories of nominal variables

- Centering variables

- Restructuring data from wide format to long (or the reverse)

An introduction to probability and statistics.

#3: Formatting Variables:

Both original and newly created variables need to be formatted correctly for two reasons:

- So your software works with them correctly. Failing to format a missing value code or a dummy variable correctly will have major consequences for your data analysis.

- It’s much faster to run the analyses and interpret results if you don’t have to keep looking up which variable Q156 is.

Learn the basics of probability.

Examples include:

- Setting all missing data codes so missing data are treated as such

- Formatting date variables as dates, numerical variables as numbers, etc.

- Labeling all variables and categorical values so you don’t have to keep looking them up.