What Is Heteroscedasticity?

Heteroscedasticity (also spelled heteroskedasticity) is an important concept to understand when you interpret many statistical models, including linear regression. So, today, we decided to take a closer look at what heteroscedasticity is, how to spot it, and why it matters.

Simply put, heteroscedasticity describes a situation in which the variance of the residuals depends on the value of the predictor variable.

If you remember, a residual is the difference between the actual outcome and the outcome predicted by your model, and the variance measures how spread out a set of values is around their mean. So the variance of the residuals tells you how widely the actual data points scatter around the model's predictions.
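To make these two ideas concrete, here is a minimal Python sketch that fits a straight line by ordinary least squares, computes each residual as actual minus predicted, and then measures how spread out those residuals are. The five data points and the helper names `fit_line` and `residuals` are hypothetical, chosen only for illustration.

```python
from statistics import mean, pvariance

def fit_line(xs, ys):
    """Return (slope, intercept) of the ordinary least-squares line."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar

def residuals(xs, ys):
    """Residual = actual outcome minus the outcome predicted by the fitted line."""
    slope, intercept = fit_line(xs, ys)
    return [y - (intercept + slope * x) for x, y in zip(xs, ys)]

# Hypothetical data, for illustration only.
xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

res = residuals(xs, ys)
print("residuals:", [round(r, 2) for r in res])
# pvariance divides by n; regression software usually divides by n - 2 instead.
print("variance of residuals:", round(pvariance(res), 4))
```

Because the line includes an intercept, the residuals always sum to (numerically) zero, so their variance is simply the average squared distance of the points from the line.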

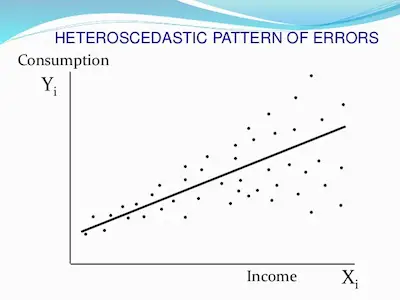

We can then say that the data are heteroscedastic when the amount by which the residuals vary around the model changes as the predictor variable changes.
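The Python sketch below, using only the standard library, simulates a hypothetical data set in which the noise grows with x (its standard deviation is 0.5 × x), fits a straight line, and compares how widely the residuals scatter for the smaller and larger x values. With heteroscedastic data like this, the spread for large x comes out noticeably larger than the spread for small x.

```python
import random
from statistics import mean, pstdev

def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept for one predictor."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar

random.seed(0)
xs = [i / 10 for i in range(1, 201)]                      # x from 0.1 to 20.0, ascending
ys = [3 + 2 * x + random.gauss(0, 0.5 * x) for x in xs]   # hypothetical: noise sd = 0.5 * x

slope, intercept = fit_line(xs, ys)
res = [y - (intercept + slope * x) for x, y in zip(xs, ys)]

half = len(xs) // 2
low_spread = pstdev(res[:half])    # residuals for the smaller x values
high_spread = pstdev(res[half:])   # residuals for the larger x values
print(f"residual spread, low x:  {low_spread:.2f}")
print(f"residual spread, high x: {high_spread:.2f}")
```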

Many statistics students find these definitions hard to grasp on their own, so there is nothing like working through an example to fully understand what heteroscedasticity is.

Heteroscedasticity – An Example

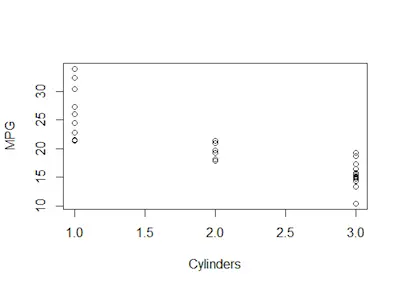

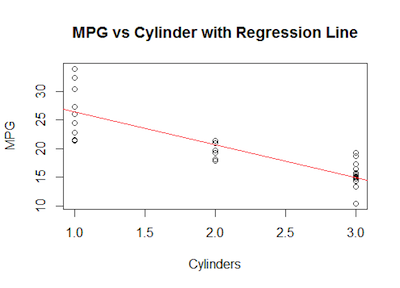

Imagine that you are shopping for a car. One of the most important things people want to know before buying a new car is its gas mileage. With this in mind, you decide to compare the number of engine cylinders with the gas mileage, and you end up with the graph shown above.

As you can see, there is a general downward pattern. However, the data points are also quite scattered. It is possible to fit a line of best fit to the data, but it misses a lot of the data points.

If you look closely at the image above, you can see that the spread of the data points is not constant: it changes as you move along the x-axis instead of staying roughly the same width. This is what heteroscedastic data looks like, and it means that a simple linear model doesn't fit the data very well, so you will probably need to adjust it.

Why Should You Care About Heteroscedasticity?

The main reason heteroscedasticity is important is that it often signals that your data are influenced by something your model is not accounting for. This means you may need to revise your model, since something else is going on.

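In general, you can check for heteroscedasticity by plotting the residuals against the independent variable on the x-axis. If the residuals fan out (or narrow) as you move along the x-axis, the variability of the residuals depends on the value of the independent variable. This is a problem because it violates one of the assumptions of linear regression: that the residuals have constant variance. When that assumption fails, the estimated coefficients are still unbiased, but their standard errors, and therefore the confidence intervals and p-values built on them, become unreliable.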
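A residual plot is the usual first check. If you want a number to go with the picture, one common formal test is the Breusch-Pagan test. The Python sketch below implements its studentized (Koenker) form for a single predictor: regress the squared residuals on x and compute LM = n × R², which is compared with a chi-square distribution with 1 degree of freedom (5% critical value ≈ 3.841). The simulated data and the function names are hypothetical; in practice you would more likely use a statistics package than hand-rolled code.

```python
import random
from statistics import mean

CHI2_1DF_CRIT_5PCT = 3.841  # 95th percentile of chi-square with 1 degree of freedom

def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept for one predictor."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar

def r_squared(xs, ys):
    """Share of the variance in ys explained by a straight line in xs."""
    slope, intercept = fit_line(xs, ys)
    y_bar = mean(ys)
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - y_bar) ** 2 for y in ys)
    return 1 - ss_res / ss_tot

def breusch_pagan_lm(xs, ys):
    """Koenker's studentized Breusch-Pagan statistic: n * R^2 of squared residuals on x."""
    slope, intercept = fit_line(xs, ys)
    sq_res = [(y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys)]
    return len(xs) * r_squared(xs, sq_res)

random.seed(1)
xs = [i / 10 for i in range(1, 201)]
het_ys = [3 + 2 * x + random.gauss(0, 0.5 * x) for x in xs]  # hypothetical: noise grows with x
hom_ys = [3 + 2 * x + random.gauss(0, 2.0) for x in xs]      # hypothetical: constant noise

for label, ys in [("heteroscedastic", het_ys), ("homoscedastic", hom_ys)]:
    lm = breusch_pagan_lm(xs, ys)
    verdict = "reject" if lm > CHI2_1DF_CRIT_5PCT else "do not reject"
    print(f"{label}: LM = {lm:.2f} -> {verdict} constant variance at the 5% level")
```

For the constant-noise series the statistic should usually fall below the critical value, though by design a 5% test will still flag about one in twenty data sets that really are homoscedastic.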

So, whenever this occurs, you need to rethink your model.

Special Notes

One thing many people don't realize is that heteroscedasticity has an opposite: data can also be homoscedastic.

Simply put, data are homoscedastic when the variability of the residuals does not change as the independent variable changes. So, if your data are homoscedastic, that is a good thing: it means your model satisfies the constant-variance assumption of linear regression, which is one sign that it is a reasonable fit.

One common misconception about heteroscedasticity and homoscedasticity is that they describe the variables themselves. In fact, they describe only the residuals.
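The following Python sketch illustrates both points with hypothetical simulated data. The predictor is drawn from a skewed exponential distribution, so its own values are far more spread out at the high end, but the noise added to y has a constant standard deviation. Splitting the observations at the median of x shows that the predictor's spread differs a lot between the two halves while the residuals' spread stays roughly the same, so the data are homoscedastic: that label is about the residuals, not the variables.

```python
import random
from statistics import mean, pstdev

def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept for one predictor."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar

random.seed(2)
xs = sorted(random.expovariate(1.0) for _ in range(200))  # skewed predictor, sorted ascending
ys = [1 + 3 * x + random.gauss(0, 1.0) for x in xs]       # constant noise: sd = 1 everywhere

slope, intercept = fit_line(xs, ys)
res = [y - (intercept + slope * x) for x, y in zip(xs, ys)]

half = len(xs) // 2
x_ratio = pstdev(xs[half:]) / pstdev(xs[:half])      # spread of the predictor itself
res_ratio = pstdev(res[half:]) / pstdev(res[:half])  # spread of the residuals
print(f"high/low spread of x:         {x_ratio:.2f}")
print(f"high/low spread of residuals: {res_ratio:.2f}")
```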