The Distribution of Independent Variables in Regression Models

When you use regression models, it is normal to make some distributional assumptions. However, there is one set of variables about which you make no distributional assumptions at all: the independent variables. But why?

If you think about it, this makes perfect sense, because regression models are directional. A correlation has no direction: Y and X are interchangeable, so if you switch the variables, you end up with the same correlation coefficient. A regression, on the other hand, distinguishes the outcome (Y) from the predictors (X), and regressing X on Y does not give the same model as regressing Y on X.
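A quick way to see the difference is to compute both quantities in both directions. The sketch below uses Python with NumPy (an assumption; the article names no software) on simulated data. The correlation is identical either way, but the slope from regressing Y on X does not match the X-on-Y line rewritten in terms of Y. For least-squares lines with an intercept, the product of the two slopes equals the squared correlation.

```python
import numpy as np

# Simulated (hypothetical) data with a moderate linear relationship.
rng = np.random.default_rng(1)
x = rng.normal(size=200)
y = 0.5 * x + rng.normal(size=200)

# Correlation is symmetric: swapping the variables changes nothing.
r_xy = np.corrcoef(x, y)[0, 1]
r_yx = np.corrcoef(y, x)[0, 1]

# Regression is directional: each direction gives its own least-squares line.
slope_y_on_x = np.polyfit(x, y, 1)[0]   # Y treated as the outcome
slope_x_on_y = np.polyfit(y, x, 1)[0]   # X treated as the outcome

print(r_xy, r_yx)                        # the same number twice
# If regression were symmetric, the X-on-Y line rewritten as Y = a + bX
# would have slope 1 / slope_x_on_y; it does not match slope_y_on_x.
print(slope_y_on_x, 1 / slope_x_on_y)
print(slope_y_on_x * slope_x_on_y, r_xy ** 2)  # product of slopes equals r squared
```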


Keep in mind that regression is a model of the outcome variable. What predicts its value, and how well? How much of its variance can its predictors explain? Notice that every one of these questions is about the outcome.
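The last question, how much of the outcome's variance the predictors explain, is what R² measures. Here is a minimal sketch in Python with NumPy (an assumed tool) on simulated, hypothetical data: R² is one minus the ratio of the residual sum of squares to the total sum of squares of Y. Both sums are computed on the outcome only, which underlines that the model is about Y.

```python
import numpy as np

# Simulated (hypothetical) outcome that depends linearly on x plus noise.
rng = np.random.default_rng(2)
x = rng.uniform(0, 10, size=500)
y = 1.0 + 0.8 * x + rng.normal(0, 2.0, size=500)

slope, intercept = np.polyfit(x, y, 1)      # polyfit returns [slope, intercept]
fitted = intercept + slope * x

ss_total = np.sum((y - y.mean()) ** 2)      # total variation in the outcome
ss_resid = np.sum((y - fitted) ** 2)        # variation the model leaves unexplained
r_squared = 1 - ss_resid / ss_total
print(round(r_squared, 3))
```

With a single predictor and an intercept, this R² equals the squared correlation between x and y.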

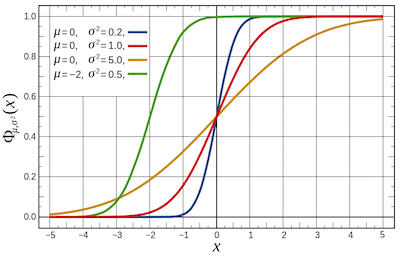

One thing to keep in mind about regression models is that the outcome variable is treated as a random variable. This means that while you can explain, or even predict, some of its variation, you can't explain all of it. After all, it is subject to some randomness that affects its value in any particular observation.
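In ordinary linear regression, the most familiar case, this randomness is written explicitly as an error term attached to the outcome:

$$
Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i, \qquad \varepsilon_i \sim N(0, \sigma^2),
$$

so that, given $X_i = x_i$, the outcome is distributed as $Y_i \sim N(\beta_0 + \beta_1 x_i,\ \sigma^2)$. The distributional assumption sits on $\varepsilon_i$, and therefore on $Y_i$ given $X_i$; nothing in the model specifies how $X_i$ itself is distributed.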

This isn't true of predictor variables. Regression treats predictors as fixed values rather than as the outcome of a random process (equivalently, it models Y conditional on the observed values of X). So there are no assumptions about the distribution of predictor variables: they don't have to be normally distributed, continuous, or even symmetric. But you still need to be able to interpret their coefficients.
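To illustrate, the sketch below (Python with NumPy, an assumed tool) draws a strongly right-skewed predictor from an exponential distribution, generates the outcome from a known line with normal errors, and fits ordinary least squares. The true intercept (2) and slope (3) are hypothetical values chosen for the demonstration. The estimates land close to them even though X is nowhere near normal.

```python
import numpy as np

# Hypothetical simulation: skewed predictor, normal errors on the outcome.
rng = np.random.default_rng(0)
n = 10_000
x = rng.exponential(scale=1.0, size=n)         # right-skewed, non-normal predictor
y = 2.0 + 3.0 * x + rng.normal(0.0, 1.0, n)    # true intercept 2, true slope 3

X = np.column_stack([np.ones(n), x])           # intercept column + predictor
b0, b1 = np.linalg.lstsq(X, y, rcond=None)[0]  # ordinary least squares
print(f"intercept ≈ {b0:.2f}, slope ≈ {b1:.2f}")
```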


Analyzing the Distribution of Independent Variables in Regression Models

#1: A one-unit difference in X has to be meaningful. A coefficient describes the expected difference in Y associated with a one-unit difference in X. If X is numeric and continuous, a one-unit difference makes perfect sense. If X is numeric but discrete, a one-unit difference still makes sense.

If X is nominal categorical, a one-unit difference doesn't mean anything on its own. Gender is a simple example of such a variable. However, if you code its two categories so that they are one unit apart from each other, as in dummy coding, or so that each is one unit from the grand mean, as in effect coding, you can make the coefficient meaningful.
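The two coding schemes can be compared directly. This sketch uses Python with NumPy (an assumed tool) and made-up scores for two hypothetical categories, A and B; `ols` is a hypothetical helper. With dummy coding (A = 0, B = 1), the slope is the difference between the group means. With effect coding (A = -1, B = +1), the intercept is the mean of the two group means (which equals the grand mean when the groups have equal size) and the slope is each group's distance from it, half the difference.

```python
import numpy as np

# Hypothetical outcome scores for the two categories of a nominal predictor.
y_a = np.array([4.0, 5.0, 6.0])          # category A, mean 5.0
y_b = np.array([7.0, 8.0, 9.0, 10.0])    # category B, mean 8.5 (unequal sizes on purpose)
y = np.concatenate([y_a, y_b])
is_b = np.array([0] * len(y_a) + [1] * len(y_b), dtype=float)

def ols(x, y):
    """Hypothetical helper: intercept and slope from least squares with one predictor."""
    X = np.column_stack([np.ones_like(x), x])
    return np.linalg.lstsq(X, y, rcond=None)[0]

dummy = is_b                 # A = 0, B = 1: categories one unit apart
effect = 2 * is_b - 1        # A = -1, B = +1: each one unit from the midpoint

b0_d, b1_d = ols(dummy, y)   # intercept = mean of A, slope = mean B - mean A
b0_e, b1_e = ols(effect, y)  # intercept = (mean A + mean B) / 2, slope = half the gap
```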

But what if X is ordinal, that is, a set of ordered categories? There is no clever coding scheme that preserves the order without also treating all one-unit differences as equivalent. So while nothing in the model rules out an ordinal X, there is no way to interpret its coefficients meaningfully as they stand. You are left with two options: drop the order and treat X as nominal, or assume that the one-unit differences are equivalent and treat X as numeric.
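The two options give different coefficients whenever the steps between levels are unequal. In this sketch (Python with NumPy, an assumed tool), a hypothetical three-level ordinal predictor (low, medium, high) has noise-free level means of 10, 11 and 15. Treated as nominal, each level gets its own comparison with "low" and the order is ignored. Treated as numeric, a single slope summarizes both steps as if they were equal.

```python
import numpy as np

# Hypothetical ordinal predictor with ordered levels 0 = low, 1 = medium, 2 = high.
level = np.repeat([0, 1, 2], 4)
# Noise-free outcome whose level means are NOT equally spaced: 10, 11, 15.
y = np.array([10.0, 11.0, 15.0])[level]

# Option 1: treat the levels as nominal (dummy coding, "low" as the reference).
X_nominal = np.column_stack([np.ones(len(level)), level == 1, level == 2]).astype(float)
b_nominal = np.linalg.lstsq(X_nominal, y, rcond=None)[0]
# b_nominal is about [10, 1, 5]: each level compared with "low", order not used

# Option 2: treat the levels as numeric, assuming every one-unit step is equivalent.
X_numeric = np.column_stack([np.ones(len(level)), level]).astype(float)
b_numeric = np.linalg.lstsq(X_numeric, y, rcond=None)[0]
# b_numeric[1] is about 2.5: one slope averaging the unequal steps (+1 and +4)
```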


#2: The structure of Y differs across types of regression models, but as long as you take that structure into account, coefficients are interpreted the same way. A dummy variable or a quadratic term works the same way in any regression model, whether linear, logistic, or Poisson; what changes is the scale on which its coefficient acts.
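To see a dummy variable behave the same way in two different models, this sketch (Python with NumPy, an assumed tool) fits a linear model and a logistic model to the same hypothetical binary outcome. The logistic fit uses a hand-written Newton-Raphson loop; `fit_logistic` is a hypothetical helper, not a library function. In both models the intercept describes the reference group and the dummy coefficient describes the difference between groups: on the probability scale in the linear model, on the log-odds scale in the logistic one.

```python
import numpy as np

def fit_logistic(X, y, iters=25):
    """Hypothetical helper: unpenalized logistic regression via Newton-Raphson."""
    beta = np.zeros(X.shape[1])
    for _ in range(iters):
        p = 1.0 / (1.0 + np.exp(-X @ beta))   # predicted probabilities
        w = p * (1.0 - p)                      # Newton/IRLS weights
        grad = X.T @ (y - p)                   # gradient of the log-likelihood
        hess = X.T @ (X * w[:, None])          # negative Hessian
        beta = beta + np.linalg.solve(hess, grad)
    return beta

def logit(p):
    return np.log(p / (1.0 - p))

# Hypothetical data: group A has 30 successes out of 100, group B has 60 out of 100.
d = np.repeat([0.0, 1.0], 100)                        # dummy: 0 = A, 1 = B
y = np.concatenate([np.repeat([1.0, 0.0], [30, 70]),
                    np.repeat([1.0, 0.0], [60, 40])])
X = np.column_stack([np.ones_like(d), d])

b_lin = np.linalg.lstsq(X, y, rcond=None)[0]   # linear model: probability scale
b_log = fit_logistic(X, y)                      # logistic model: log-odds scale

# Linear:   intercept = P(success | A) = 0.3, dummy = 0.6 - 0.3
# Logistic: intercept = logit(0.3),           dummy = logit(0.6) - logit(0.3)
```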

#3: The units in which X is measured matter, because the coefficient is expressed per unit of X. It can therefore be useful to apply a linear transformation to X to change its scale.
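Here is a minimal sketch of two common linear transformations, in Python with NumPy (an assumed tool) on made-up height and weight values. Rescaling X from meters to centimeters divides the slope by 100 because one centimeter is a hundredth of the original unit. Centering X (subtracting its mean) leaves the slope unchanged but moves the intercept to the predicted outcome at the average X, which here is the mean of Y.

```python
import numpy as np

# Hypothetical data: height in meters predicting weight in kilograms.
height_m = np.array([1.55, 1.62, 1.70, 1.75, 1.83, 1.90])
weight = np.array([52.0, 58.0, 66.0, 70.0, 79.0, 85.0])

slope_m, icpt_m = np.polyfit(height_m, weight, 1)          # kg per meter
slope_cm, icpt_cm = np.polyfit(height_m * 100, weight, 1)  # kg per centimeter
centered = height_m - height_m.mean()
slope_c, icpt_c = np.polyfit(centered, weight, 1)          # same slope, new intercept
```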


#4: The other terms in the model matter. Some coefficients are interpretable only when the model contains other terms. For example, interactions aren't interpretable without the terms that make them up (the lower-order terms). And including an interaction changes the meaning of those lower-order terms from main effects to marginal effects: each lower-order coefficient now describes the effect of its variable when the other variable in the interaction equals zero.
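The shift in meaning is easy to see with noise-free data. This sketch (Python with NumPy, an assumed tool; `fit` is a hypothetical helper) generates an outcome from a known model with an x1-by-x2 interaction, where x2 runs from 1 to 5. The coefficient on x1 is its slope where x2 = 0, a value that does not even occur in these data. After centering x2, the same coefficient becomes the slope of x1 at the average x2.

```python
import numpy as np

# Hypothetical noise-free data on a grid, generated from
# y = 1 + 2*x1 + 3*x2 + 0.5*x1*x2, with x2 ranging from 1 to 5.
x1, x2 = np.meshgrid(np.arange(5.0), np.arange(1.0, 6.0))
x1, x2 = x1.ravel(), x2.ravel()
y = 1 + 2 * x1 + 3 * x2 + 0.5 * x1 * x2

def fit(*cols):
    """Hypothetical helper: least-squares coefficients with an intercept first."""
    X = np.column_stack([np.ones_like(y), *cols])
    return np.linalg.lstsq(X, y, rcond=None)[0]

b = fit(x1, x2, x1 * x2)
# b[1] (about 2) is the slope of x1 only where x2 == 0;
# where x2 == 3 the slope of x1 is b[1] + 3 * b[3]

x2_c = x2 - x2.mean()             # center x2 (its mean is 3)
b_c = fit(x1, x2_c, x1 * x2_c)
# b_c[1] (about 3.5) is now the slope of x1 at the average x2: 2 + 0.5 * 3
```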